👋 About Me

Hi! I’m a Ph.D. student at Xiamen University, working under the guidance of Prof. Liujuan Cao and Prof. Rongrong Ji. I also collaborate closely with Prof. Yiyi Zhou and Prof. Yongjian Deng (Beijing University of Technology).

My research interests include multimodal learning and efficient training. If you’re interested in my work or have ideas for collaboration, feel free to reach out via email.

🔥 News

- 2025.12: 🎉🎉 Paper “EvSAM: Segment Anything Model with Event-based Assistance” accepted by ACM TOMM 2026!

- 2025.09: 🎉🎉 Paper “Event-based Video Interpolation via Complementary Motion Information” accepted by EAAI 2025!

- 2025.09: 🎉🎉 Paper “EPA: Boosting Event-based Video Frame Interpolation with Perceptually Aligned Learning” accepted by NeurIPS 2025!

- 2025.03: 🎉🎉 Paper “Neuromorphic event-based recognition boosted by motion-aware learning” accepted by Neurocomputing 2025!

- 2024.06: 🎉🎉 Paper “Video Frame Interpolation via Direct Synthesis with the Event-based Reference” accepted by CVPR 2024!

- 2024.04: 🎉🎉 Paper “SAM-Event-Adapter: Adapting Segment Anything Model for Event-RGB Semantic Segmentation” accepted by ICRA 2024!

📝 Publications

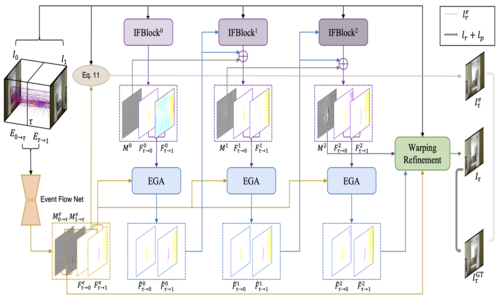

Event-based Video Interpolation via Complementary Motion Information

Yuhan Liu, Linghui Fu, Hao Chen, Zhen Yang, Youfu Li, Yongjian Deng

We propose a novel end-to-end learning framework that explicitly leverages the complementary characteristics of event signals and frames for event-based video interpolation. Our method fuses dense contextual information from frames with sparse but precise edge motion from events through an Edge Guided Attention (EGA) module, and introduces an event-based visibility map to adaptively mitigate occlusions during warping.

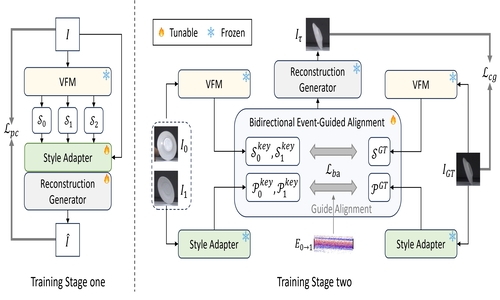

EPA: Boosting Event-based Video Frame Interpolation with Perceptually Aligned Learning

Yuhan Liu, Linghui Fu, Zhen Yang, Hao Chen, Youfu Li, Yongjian Deng

Image degradation in training data reduces the generalization ability of interpolation models. To address this, we propose EPA, a framework that shifts learning from the unstable pixel space to a semantically aware feature space that is insensitive to degradation.

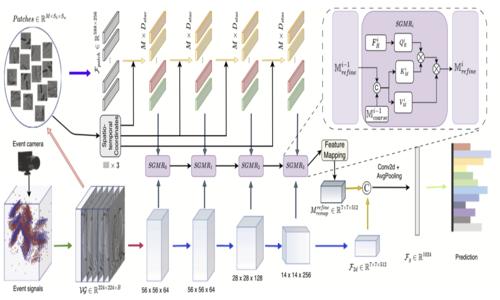

Neuromorphic event-based recognition boosted by motion-aware learning

Yuhan Liu, Yongjian Deng, Bochen Xie, Hai Liu, Zhen Yang, Youfu Li

We propose a motion-aware learning framework that addresses the inability of traditional 2D CNNs to effectively encode motion for event-based recognition. Our method introduces an auxiliary Motion-Aware Branch (MAB) to learn dynamic spatio-temporal correlations, providing complementary motion-centric knowledge that is then fused with the rich spatial semantics from the primary CNN branch for a more robust and comprehensive representation.

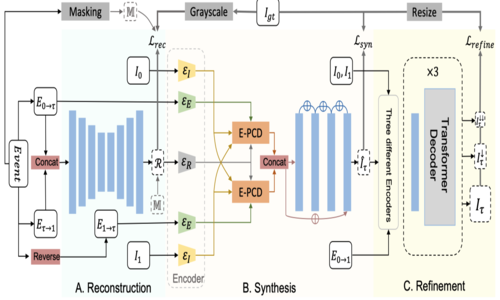

Video Frame Interpolation via Direct Synthesis with the Event-based Reference

Yuhan Liu, Yongjian Deng, Hao Chen, Zhen Yang

This paper introduces a novel event-based video frame interpolation (E-VFI) framework that obviates the need for explicit motion estimation by directly synthesizing the target frame. We first reconstruct a structural reference of the intermediate scene solely from event data, and then align the keyframes to this reference with a proposed deformable alignment module (E-PCD). This design significantly improves the model's ability to handle severe occlusions, where traditional motion-based methods typically fail.

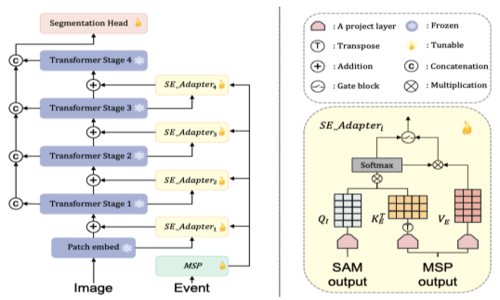

SAM-Event-Adapter: Adapting Segment Anything Model for Event-RGB Semantic Segmentation

Bowen Yao, Yongjian Deng, Yuhan Liu, Hao Chen, Youfu Li, Zhen Yang

This paper introduces SAM-Event-Adapter, a novel and parameter-efficient method to adapt the Segment Anything Model (SAM) for multi-modal Event-RGB semantic segmentation. By freezing the powerful SAM backbone and inserting lightweight adapter modules, we effectively fuse motion cues from event cameras with texture information from RGB images, significantly improving segmentation performance in adverse conditions while avoiding the prohibitive costs and generalization loss associated with full model retraining.

🎖 Honors and Awards

- 2025.06 Received the title of Outstanding Graduate of Beijing University of Technology.

- 2024.09 Received the National Scholarship.

- 2022.10 National Second Prize in the Chinese College Students Computer Competition.

- 2022.05 Provincial Second Prize in the Chinese College Students Computer Software Design Competition.

📖 Education

- Now, pursuing a Ph.D. at Xiamen University (MAC Lab).

- 2022.09 - 2025.06, Master’s Degree, Beijing University of Technology (DMS Lab).

- 2018.09 - 2022.06, Bachelor’s Degree, Huaqiao University.

🤝 Collaborators

- Yongjian Deng - Beijing University of Technology.

- Yurun Chen - Zhejiang University.

💻 Services

Conference Reviewer

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

Journal Reviewer

- IEEE Transactions on Circuits and Systems for Video Technology (TCSVT)